One of the myths in decision making is that we get selective in gathering information than being objective! Thanks to the business intelligence tools, which help reveal hidden patterns in the data to broaden the scope of information and helping people think out of the box. The SQL Server datamining addins for Excel 2007 have a very rich and wide range of business intelligence features including data key influences, forecasting and trend annalysis, categorizing the data, identifying annomalies in the data (exception highlighting), and shoping basket annalysis etc.

The most cool thing about these addins is, that managers, data-analysts, and decision makers can do alot without any substantial technical IT background!

Note: the installation and configuation procedure is at the end of this discussion:

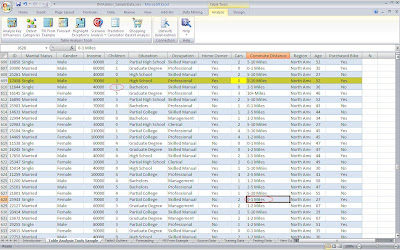

Here is Sample Base Customer Data of a Byke Selling Company (click on the image below):

The company's marketing and sales people need to build future strategy and inline future campaigns to make sure that they hit more sales and attract high value customers! The focus is;

- Potential customers: i.e., what type of people buy a byke and which ones that do not. (eg, a car owner is probably not going to buy a byke, however those with a substantial commute distance with no car is a potential byke buyer)

- Categories and hidden patterns in the data:

- Sales forecasting

- Buyer trends

Below are some of the examples of datamining features!

Annalyse Key Influencers:

This feature shows, how much impact/influence the other columns of the sample data put on a target column. in this case, we have selected the influence of all columns on Byke Purchase column to see what characteristics in other columns impact on byke purchase!

Below is the result!

Here, you see that the result shows the people with zero cars are the most valuable customers and this helps the management save a lot of money spending on marketing campaigns targeting irrelevant people!

Detect Categories:

This feature mines the data and then classifies the data into very interesting categories!

Excel 2007 will add the category column to the source data as well.

Forecasting:

Given below is the sample of region wise monthly sales!

Below is the forecast report for the sample data above!

The estimated sales have also been added to the actual source data:

Forecast Graph report!

Buyer Trends: shopping Basket Annalysis:

Sampe data of customer orders and trends of items purchased!

The annalysis shows that with a particular item, what other items are purchased in a single order; so that relevant items are placed together for sales; that's how METRO, WallMart and other large cash & carry stores do to hit more sales!

Below are the results of shopping basket annalysis below for the above sample data!

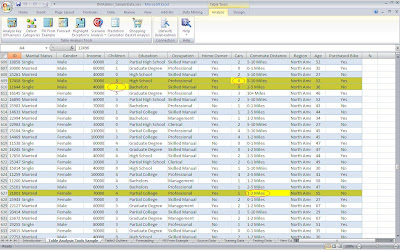

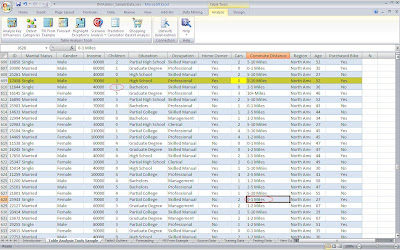

Highlight Exceptions:

This feature mines the data and detects annomalies/outfitters with in the data patterns!

Here's how we use this feature:

Below are the examples of exceptions highlighted by the tool:

Summary of exceptions found!

Exceptions in the data (outliers). the row is marked yellow with the highlighted actual field causing exception.

If you fix the annomaly by changing the field, the exception goes away automatically!

Installation/Configuration Procedure:

Install SQL Server 2008 Annalysis Services.

Follow the link below for the details!

After the installation, connect the Annalysis Server through SQL Server Management Studio and open properties of the server.

Choose Show Advance (All) Properties

Set the below two options to TRUE:

- DataMining \ AllowAdHocOpenRowsetQueries

- DataMining \ AllowSessionMiningModels

Additionally, you can download the sample databases for SQL 2008 including Warehouse database to building you own mining models using Business Intelligence Studio!

Download the SQLServer2008 datamining Addins for Excel 2007:

Run the setup and install the datamining addins.

Run Excel 2007 and open the file :

c:\Program Files\Microsoft SQL Server 2008 DM Add-Ins\DMAddins_SampleData.xlsx

You will see a new button Data Mining appeared in the excel tool.

Note: if the datamining buttons do not appear in the excel tool: cleck the excel options from the menu and choos ADDINS ; under the manage options; choose exceladdins and make sure the datamining addins are selected.

Click on the dataming button, and from the list; choose CONNECTIONS and add connection to the annlaysis server.

You are ready to use Business Intelligence data mining features of Sql Server 2008 Annalysis Services.

Additional to datamining button, if you load data and format the data to a table; ANALYZE button appears in the menu that contain many Data mining features!

Please leave comments on the discussion if have any question/issue installing and configuring the setup!

Cheers!

.jpg)